3.4 Syntax

Dinesh Ramoo

Now that we are familiar with the units of sound, articulation and meaning, let us explore how these are put together in connected speech. Syntax is the set of rules and processes that govern sentence structure in a language. A basic description of syntax would be the sequence in which words can occur in a sentence. One of the earliest approaches to syntactic theory comes from the Sanskrit grammarian Pāṇini (c. 4th century BC) and his seminal work, the Aṣṭādhyāyī. While the field has diversified into many schools, we will look at some basic issues of syntax and at the contributions of Noam Chomsky.

Living Language

Look at these two sentences and decide which one seems normal to you:

- Paul gave Mary a new book.

- Paul new a book Mary gave.

Why is one not considered correct even though it contains all the same words? Can you articulate the rules that govern your decision or are they intuitive?

Grammar employs a finite set of rules to generate the infinite variety of output in a language. This is the basis for generative grammar. Chomsky argued for a system of sentence generation that took into account the underlying syntactic structure of sentences. He emphasised the intuition that allows any native speaker of a language to identify ill-formed sentences in that language. The speaker may not be able to provide a rationale for why some sentences are acceptable and others are not. However, it cannot be denied that such intuitions exist in every person. While Chomsky’s ideas have evolved over the years, the main conclusions appear to be that language is a rule-based system and that a finite set of syntactic rules can capture our knowledge of syntax.

A key aspect of language is that we can construct sentences from words using a finite set of rules. Phrase-structure rules are a way to describe how words can be combined into different structures. Sentences are constructed from smaller units. If a sentence is designated as S, we can use rewrite rules to expand it into other symbols such as noun phrases (NP) and verb phrases (VP), as in:

S → NP + VP

Phrase-structure grammar has words (terminal elements) and other constituent parts (non-terminal elements). This means that words usually form the lowest level of a structure that builds up towards a sentence. The rules that we use to construct these sentences do not deal with individual words but with classes of words. Such classes include words that name objects (nouns), words for actions (verbs), words that describe nouns (adjectives), and words that qualify actions (adverbs). We can also think of words that determine number such as ‘the’, ‘a’ and ‘some’ (determiners), words that join constituents such as ‘and’ and ‘because’ (conjunctions), words that substitute for a noun or noun phrase as in ‘I’ and ‘she’ (pronouns), and words that express spatial or temporal relations as in ‘in’ and ‘on’ (prepositions).
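The idea of rewriting symbols until only words remain can be sketched in a few lines of Python. This is a toy illustration, not a model of English: only the rule S → NP + VP comes from the text, while the other rules, the category labels `Det`, `N`, `Name` and `V`, and the four-word lexicon are assumptions chosen to keep the example small.

```python
import itertools

# Toy phrase-structure grammar. Only S -> NP VP comes from the text;
# the remaining rules and the tiny lexicon are illustrative assumptions.
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"], ["Name"]],
    "VP": [["V", "NP"]],
    "Det": [["the"]],
    "N": [["dog"]],
    "Name": [["John"]],
    "V": [["likes"]],
}


def expand(symbol):
    """Yield every word sequence that `symbol` can be rewritten into."""
    if symbol not in RULES:
        # No rule rewrites this symbol, so it is a terminal element (a word).
        yield [symbol]
        return
    for right_side in RULES[symbol]:
        # Expand each symbol on the right-hand side, then combine the results.
        parts = [list(expand(s)) for s in right_side]
        for combo in itertools.product(*parts):
            yield [word for part in combo for word in part]


sentences = [" ".join(words) for words in expand("S")]
for sentence in sentences:
    print(sentence)
```

Symbols that appear as keys in `RULES` play the role of non-terminal elements, and anything without a rule of its own is treated as a terminal element. With these seven rules the sketch produces four sentences, including ‘the dog likes John’.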

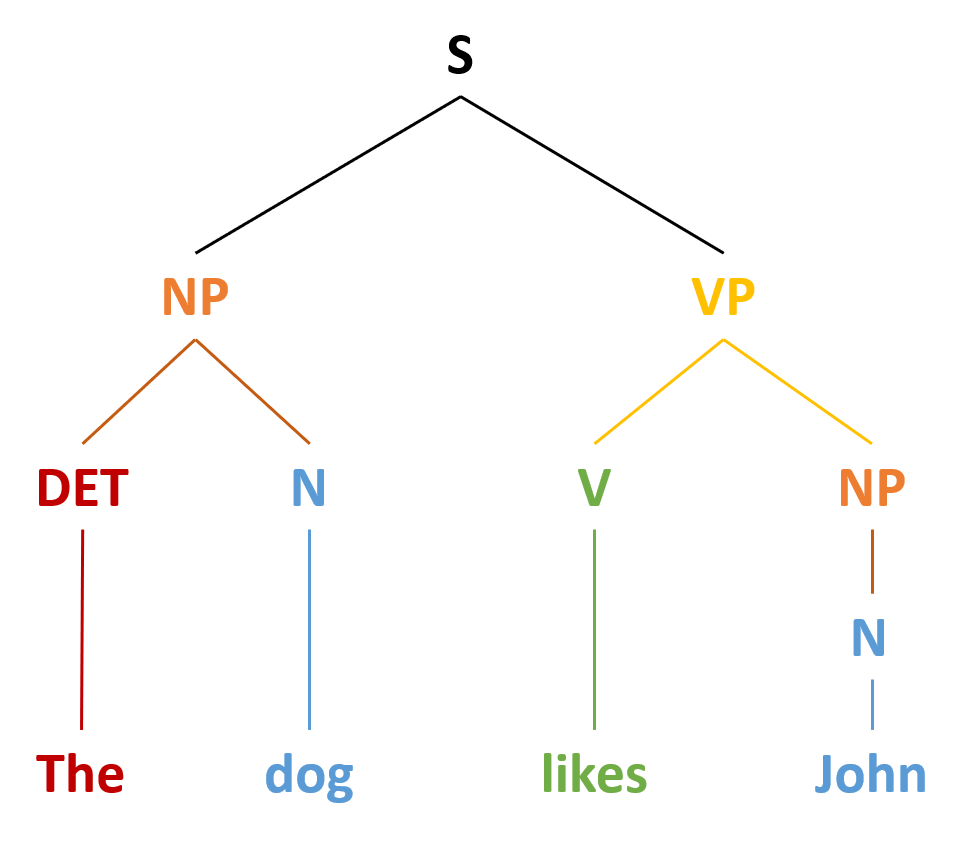

These types of words combine to form phrases. Phrases that can take the place of nouns in sentences are called noun phrases. So ‘dog,’ ‘the dog’ or ‘the naughty dog’ are all noun phrases because they can fill the gap in a sentence such as ‘_____ ran through the park’. Phrases combine to form clauses. These contain a subject (what we are talking about) and a predicate (information about the subject). Every clause has to have a verb, and sentences can consist of one or more clauses. As we see in Figure 3.6, the sentence ‘the dog likes John’ consists of one clause composed of a noun phrase and a verb phrase. It contains a subject ‘the dog,’ a verb ‘likes,’ and an object ‘John.’

One way to think about how sentences are organized in the mind is through a notation called a tree diagram. They are called tree diagrams because they branch from a single point into phrases which in turn branch into words. Each place where the branches come together is called a node. A node indicates a set of words that act together as a unit or constituent. Consider Figure 3.6 which illustrates how a sentence can be depicted in a tree diagram.
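A tree diagram can also be written down as nested data, which makes the idea of a node as a constituent easy to inspect. The sketch below encodes the tree for ‘the dog likes John’ as nested tuples. The tuple encoding, the function names and the choice to place an `N` node under the second noun phrase are assumptions made for illustration.

```python
# The tree for 'the dog likes John' as nested tuples: (label, child, child, ...).
# A bare string is a word (a leaf). This encoding is an illustrative assumption.
TREE = (
    "S",
    ("NP", ("Det", "the"), ("N", "dog")),
    ("VP", ("V", "likes"), ("NP", ("N", "John"))),
)


def leaves(node):
    """Return the words under a node, left to right."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words


def constituents(node):
    """Return a (label, phrase) pair for every node in the tree."""
    if isinstance(node, str):
        return []
    found = [(node[0], " ".join(leaves(node)))]
    for child in node[1:]:
        found.extend(constituents(child))
    return found


for label, phrase in constituents(TREE):
    print(label, "->", phrase)
```

Each pair returned by `constituents` corresponds to one node: the `VP` node, for example, covers the words ‘likes John’, which act together as a unit.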

Word Order in Different Languages

The order of the syntactic constituents varies between languages. When talking about word order, linguists generally look at 1) the relative order of subject, object and verb in a sentence (constituent order), 2) the order of modifiers such as adjectives and numerals in a noun phrase, and 3) the order of adverbials. Here we will focus mostly on constituent order.

English sentences generally display a word order consisting of subject-verb-object (SVO) as in ‘the dog [subject] likes [verb] John [object]’. Mandarin and Swahili are other examples of SVO languages. About a third of all languages have this type of word order (Tomlin, 1986). About half of all languages employ subject-object-verb (SOV). Japanese and Turkish, as well as the Indo-Aryan and Dravidian languages of India, are examples of SOV word order. Classical Arabic and Biblical Hebrew, as well as the Salishan languages of British Columbia, employ verb-subject-object (VSO). Rarer are orders such as verb-object-subject (VOS), as found in Algonquin. Unusual word ordering can be employed for dramatic effect, as in the object-subject-verb (OSV) word order of Yoda from Star Wars: ‘Powerful (object) you (subject) have become (verb). The dark side (O) I (S) sense (V) in you.’
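The constituent orders described above differ only in how the same three parts are arranged. A minimal sketch, assuming a hypothetical helper called `linearize`, makes this concrete. It uses English words purely as placeholders for the subject, verb and object slots; real languages with these orders also differ in vocabulary, morphology and much else.

```python
def linearize(subject, verb, obj, order="SVO"):
    """Arrange three constituents according to a constituent-order label."""
    if sorted(order) != ["O", "S", "V"]:
        raise ValueError(f"not a constituent order: {order!r}")
    slots = {"S": subject, "V": verb, "O": obj}
    return " ".join(slots[letter] for letter in order)


# The same three constituents under five of the orders mentioned in the text.
for order in ("SVO", "SOV", "VSO", "VOS", "OSV"):
    print(order, "->", linearize("the dog", "likes", "John", order))
```

Calling `linearize("I", "sense", "the dark side", "OSV")` reproduces the Yoda-style ordering ‘the dark side I sense’.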

The Neurolinguistics of Syntax and Semantics

We know that a sentence’s syntax has an influence on how its meaning is interpreted (the semantics of the sentence). A given string of words can have different meanings if it is assigned different syntactic structures. However, syntax doesn’t necessarily need to be in line with semantics. Chomsky (1957) famously composed a sentence that was syntactically correct but semantically meaningless: “Colorless green ideas sleep furiously.” The sentence is devoid of semantic content, but it is a perfectly grammatical sentence in English. The string “*Furiously sleep ideas green colorless” contains the same words, but their order would not be considered grammatical by a native English speaker.
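The separation between form and meaning in Chomsky's example can be illustrated with a check that looks only at word classes and never at what the words mean. The sketch below is a deliberate simplification: it swaps a full grammar for a single regular-expression pattern over category codes, and both the pattern and the five-word lexicon are assumptions made for this example.

```python
import re

# Hypothetical mini-lexicon mapping each word to a one-letter class code:
# A = adjective, N = noun, V = verb, D = adverb.
LEXICON = {
    "colorless": "A",
    "green": "A",
    "ideas": "N",
    "sleep": "V",
    "furiously": "D",
}

# One toy sentence pattern (an assumption, not a grammar of English):
# any number of adjectives, then a noun, a verb, and an optional adverb.
PATTERN = re.compile(r"A*NVD?")


def is_well_formed(sentence):
    """Check the order of word classes only; meaning plays no role."""
    codes = "".join(LEXICON[word] for word in sentence.lower().split())
    return PATTERN.fullmatch(codes) is not None


print(is_well_formed("Colorless green ideas sleep furiously"))
print(is_well_formed("Furiously sleep ideas green colorless"))
```

The first string fits the pattern and the second does not, even though neither describes anything meaningful. Words outside the lexicon raise a `KeyError`, since this sketch makes no attempt to handle unknown vocabulary.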

We have psycholinguistic evidence from electroencephalography (EEG) to support the idea that syntax and semantics are processed independently of each other. Measuring event related potentials (ERPs) for sentences yields some interesting observations. For example, the sentence “He eats a ham and cheese …” sets up a very strong expectation in your mind about what word comes next. If the word that comes next is in line with your expectations, the ERP signal stays at baseline. However, if the next word violates your expectations, then we often see a sudden negative spike in the EEG voltage around 400ms after the unexpected word. This ERP signal is called an N400 (where the N stands for negative and 400 indicates the approximate timing of the ERP after the stimulus). Numerous studies have found an N400 response when a semantically unexpected word is inserted into a sentence.

However, not every unexpected word elicits an N400 response. In some cases, where the unexpected word belongs to an unexpected word category (for example, a verb instead of a noun), we see a positive voltage around 600ms after the unexpected word. This is known as a P600. Therefore, we see that violations of semantic expectations elicit an N400 while violations of syntactic expectations elicit a P600. This suggests that syntax and semantics are processed independently in our brains.

Image description

Figure 3.6 Sentence Structure in English

The sentence “the dog likes John” consists of a noun phrase “the dog,” and a verb phrase “likes John.” The noun phrase consists of a determiner “the” and a noun “dog.” The verb phrase consists of a verb “likes,” and a noun phrase “John.”

Media Attribution

- Figure 3.6 Sentence Structure in English by Dinesh Ramoo, the author, is licensed under a CC BY 4.0 licence.

The study of how words and morphemes combine to create larger phrases and sentences.

A linguistic theory that looks at linguistics as the discovery of innate grammatical structures.

A type of rewrite rule used to define a language’s syntax.

A syntactic unit that has a noun as its head.

A syntactic unit composed of at least one verb and its dependents.

Symbols that may appear as the output of grammatical rules.

Symbols or elements that can be replaced.

A word that refers to a thing, a person, a place, an animal, a quality, or an action.

A word that conveys action or a state of being.

A word that modifies a noun or noun phrase.

A word that modifies a verb, adjective, determiner, clause, preposition or sentence.

A word that determines the type of reference of a noun or noun group.

A word used to connect clauses or sentences.

A word that can stand in for a noun or noun phrase.

A category of words that can express spatial or temporal relations or mark semantic roles.

A group of words that act as a grammatical unit.

A group of words that contain a subject and a predicate within a complex or compound sentence.

The person or thing about which a statement is made.

Everything in a declarative sentence other than the subject.

In subject-prominent languages, a noun that is distinguished by a transitive verb from the subject.

The smallest unit of language that conveys a particular meaning. A word can be made up of one or more morphemes.

A location in a diagram.

A word or group of words that can function as a single unit.

The order of words in a sentence.

A component of event related potentials that is a negative peak around 400 milliseconds after the onset of a stimulus.

A component of event related potentials that is a positive peak around 600 milliseconds after the onset of a stimulus.